More data isn’t always better! This post will go over why and how we removed uninformative variables from a modeling dataset using a custom-built neural network architecture along with cross-checks using more traditional supervised learning algorithms. The end result is a better curated dataset for our model-building process.

The Problem

This is kind of weird, right? All you hear about is everyone purchasing data and yet we’re removing some of it from our modeling table? Why?

Here at Civis, we solve many data science problems ranging from whether someone will vote to who they think will win at the Academy Awards. Solving these problems in different domains requires a dataset that includes hundreds of variables.

However, such a wide dataset has its drawbacks. Importing and exporting datasets is time consuming, and model building is much more computationally expensive with more variables. Reducing the number of variables, and therefore the size of our dataset, reduces costs in terms of employee wait time and rented computing time.

Our hypothesis is that not all of the variables we use contribute to the predictive performance of the models we build. This could be because some variables contain similar information to others or some variables may not be useful for the problems we try to solve. Also, we have found that including uninformative variables in a model can decrease performance relative to using the subset of important variables. Therefore, we set out to remove redundant or uninformative variables from our dataset without decreasing (and possibly increasing) model performance.

The Methodology

How can we remove unnecessary variables from a dataset? With Data Science of course!

We started with over 300 response variables representative of the problems we face. The goal was to retain a subset of our current explanatory variables that predicts these response variables as well as, or better than, the full set of explanatory variables.

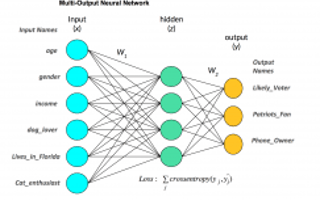

Since we don’t know the functional form of the response variables in advance, we began with a multi-output neural network. For each example, a neural network takes a vector of inputs (in this case, our explanatory variables) and passes them through several nonlinear functions to produce a prediction for a response variable. Because the features pass through nonlinear functions, the neural network can build a highly predictive model with little human intervention. This framework extends easily to the multiple-output case, as shown in the figure above.
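
A minimal NumPy sketch of a multi-output forward pass, assuming one shared tanh hidden layer and one sigmoid output per binary response variable. The layer sizes, activations, and function names are illustrative assumptions, not the architecture we actually used.

```python
import numpy as np

def init_params(n_inputs, n_hidden, n_outputs, seed=0):
    """Randomly initialize a one-hidden-layer, multi-output network (illustrative sizes)."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 1.0 / np.sqrt(n_inputs), size=(n_inputs, n_hidden))
    W2 = rng.normal(0.0, 1.0 / np.sqrt(n_hidden), size=(n_hidden, n_outputs))
    return W1, np.zeros(n_hidden), W2, np.zeros(n_outputs)

def forward(X, W1, b1, W2, b2):
    """Shared nonlinear hidden layer feeding one sigmoid output per response variable."""
    H = np.tanh(X @ W1 + b1)                      # representation shared by all responses
    return 1.0 / (1.0 + np.exp(-(H @ W2 + b2)))   # shape (n_examples, n_outputs)

def multi_output_log_loss(Y, P, eps=1e-12):
    """Binary cross-entropy averaged over examples and response variables."""
    P = np.clip(P, eps, 1.0 - eps)
    return -np.mean(Y * np.log(P) + (1.0 - Y) * np.log(1.0 - P))

rng = np.random.default_rng(1)
X = rng.standard_normal((8, 100))                  # 8 examples, 100 explanatory variables
Y = rng.integers(0, 2, size=(8, 3)).astype(float)  # 3 binary response variables
params = init_params(n_inputs=100, n_hidden=16, n_outputs=3)
P = forward(X, *params)
print(P.shape, multi_output_log_loss(Y, P))
```

Sharing the hidden layer is what makes this a single model for all responses: every output reads from the same transformed inputs.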

We then added an extra element-wise multiplication layer, with an associated lasso (L1) penalty, to the traditional multi-output neural network framework in order to perform variable selection. Penalizing this layer lets the model ‘zero out’ the weights of unimportant explanatory variables as we increase the lasso penalty. For instance, if a particular variable’s weight in this lasso-penalized layer (W0) is zero, then the neural network’s output does not depend on that variable.
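
The selection layer itself is simple. The sketch below puts an element-wise weight vector `w0` in front of an arbitrary downstream network and adds the lasso penalty on `w0` to the network's data loss. `select`, `penalized_objective`, and the toy `network` are hypothetical names used only for illustration.

```python
import numpy as np

def select(X, w0):
    """Element-wise multiplication layer: column j of X is scaled by w0[j]."""
    return X * w0

def penalized_objective(data_loss, w0, lam):
    """Data loss plus the lasso (L1) penalty on the selection weights only."""
    return data_loss + lam * np.sum(np.abs(w0))

rng = np.random.default_rng(0)
X = rng.standard_normal((5, 4))
W1 = rng.standard_normal((4, 3))
network = lambda Z, W: np.tanh(Z @ W)      # stand-in for the rest of the network

w0 = np.array([0.8, 0.0, 0.3, 0.5])        # variable 1 has been zeroed out
X_changed = X.copy()
X_changed[:, 1] = rng.standard_normal(5)   # arbitrary new values for variable 1
out = network(select(X, w0), W1)
out_changed = network(select(X_changed, w0), W1)
print(np.array_equal(out, out_changed))    # True: the output ignores variable 1

# Caveat: halving w0 and doubling the next layer gives the same output with half
# the L1 penalty, so the other weights need some constraint for the penalty to bite.
print(np.allclose(network(select(X, w0 / 2), W1 * 2), out))  # True
```

The post does not describe how this rescaling was handled; a common remedy is to also penalize or norm-constrain the downstream weights.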

Indeed, the W0 weights can be treated as a variable importance index, and they correlate highly with variable importance from permutation tests. This technique can be seen as a neural network extension of the multi-task lasso, in which a single model selects variables jointly for all response variables. However, it is more general than the multi-task lasso, since it can capture interactions and nonlinearities.
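
The comparison with permutation tests can be sketched as follows: shuffle one column at a time, record how much a fitted model's error grows, and compare that ranking with the |W0| ranking using a rank correlation. In this hypothetical example a linear predictor with known weights `w` stands in for the fitted network, so `|w|` plays the role of `|W0|`.

```python
import numpy as np

def permutation_importance(predict, X, y, metric, seed=0):
    """Increase in error when each column of X is shuffled, one column at a time."""
    rng = np.random.default_rng(seed)
    baseline = metric(y, predict(X))
    importances = np.empty(X.shape[1])
    for j in range(X.shape[1]):
        X_perm = X.copy()
        X_perm[:, j] = rng.permutation(X_perm[:, j])  # break the link between column j and y
        importances[j] = metric(y, predict(X_perm)) - baseline
    return importances

def spearman(a, b):
    """Spearman rank correlation (assumes no ties)."""
    ra = np.argsort(np.argsort(a))
    rb = np.argsort(np.argsort(b))
    return np.corrcoef(ra, rb)[0, 1]

rng = np.random.default_rng(0)
X = rng.standard_normal((1000, 3))
w = np.array([3.0, 1.0, 0.0])      # hypothetical stand-in for fitted selection weights
y = X @ w
mse = lambda t, p: np.mean((t - p) ** 2)
imp = permutation_importance(lambda A: A @ w, X, y, mse)
print(imp, spearman(np.abs(w), imp))
```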

Testing Methodology

We tested this methodology on a simulated dataset to see whether it could select the subset of important variables. The simulated dataset included 2 dependent variables, each a Friedman function of its own five random normal variables. The Friedman function contains interactions and nonlinearities that penalized regressions would not pick up, so it should be a good test of whether this method will work on our datasets. An additional 90 random normal variables were included to simulate unimportant variables. The test, then, is whether our methodology can distinguish the 10 informative variables from the 90 uninformative ones.
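
A sketch of how such a dataset could be generated, assuming the Friedman #1 function (the post does not say which Friedman function was used). The classic definition draws inputs uniformly on [0, 1]; here we follow the post and use standard normal inputs. Sample size, noise level, and names are illustrative.

```python
import numpy as np

def friedman1(x):
    """Friedman #1 function of five input columns."""
    return (10.0 * np.sin(np.pi * x[:, 0] * x[:, 1])   # interaction between inputs 1 and 2
            + 20.0 * (x[:, 2] - 0.5) ** 2               # quadratic nonlinearity
            + 10.0 * x[:, 3]
            + 5.0 * x[:, 4])

def simulate(n=5000, n_noise=90, noise_sd=1.0, seed=0):
    """Two responses, each driven by its own 5 informative columns, plus n_noise noise columns."""
    rng = np.random.default_rng(seed)
    informative = rng.standard_normal((n, 10))
    noise = rng.standard_normal((n, n_noise))
    X = np.hstack([informative, noise])
    Y = np.column_stack([
        friedman1(informative[:, :5]) + noise_sd * rng.standard_normal(n),
        friedman1(informative[:, 5:]) + noise_sd * rng.standard_normal(n),
    ])
    is_informative = np.arange(X.shape[1]) < 10   # ground truth for scoring the selection
    return X, Y, is_informative

X, Y, is_informative = simulate()
print(X.shape, Y.shape, int(is_informative.sum()))
```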

The graph above shows a subset of W0 weights plotted against the lasso penalty for the simulated dataset. Each line in the graph corresponds to a single explanatory variable in the model. As the lasso penalty increases, the weights shrink, but at different rates. At a lasso penalty of 10⁻⁴ the weights clearly have a bimodal distribution: those close to zero belong to the noise variables, and those near 0.4 belong to the important variables. Since the method separates important from unimportant variables in a multi-output test setting, we felt confident that it would give valid results when applied to our modeling dataset.
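
To see why weights reach exactly zero at different penalties, here is a deliberately simplified stand-in: a linear, single-output lasso fit with proximal gradient descent (ISTA), not the lasso neural network itself. The soft-thresholding step is what produces exact zeros; plain gradient descent on an L1 term rarely lands exactly on zero, which is one reason proximal updates are a common choice for penalized weights. Data and penalty values are made up for illustration.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * |v|: shrinks toward zero and sets small entries exactly to zero."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def lasso_ista(X, y, lam, n_iter=1000):
    """Minimize (1/2n) * ||y - X b||^2 + lam * ||b||_1 by proximal gradient (ISTA)."""
    n = X.shape[0]
    step = n / np.linalg.norm(X, 2) ** 2      # 1/L, where L is the largest eigenvalue of X^T X / n
    b = np.zeros(X.shape[1])
    for _ in range(n_iter):
        grad = X.T @ (X @ b - y) / n          # gradient of the squared-error term
        b = soft_threshold(b - step * grad, step * lam)
    return b

rng = np.random.default_rng(0)
X = rng.standard_normal((500, 20))            # columns 0 and 1 are informative, 2-19 are noise
y = 3.0 * X[:, 0] + 1.5 * X[:, 1] + 0.5 * rng.standard_normal(500)

lam_max = np.max(np.abs(X.T @ y)) / len(y)    # smallest penalty at which every coefficient is zero
for lam in [0.01, 0.1, 0.5, 1.0, 1.01 * lam_max]:
    b = lasso_ista(X, y, lam)
    print(f"lam={lam:.3f}  nonzero={np.flatnonzero(b).tolist()}  b[:2]={np.round(b[:2], 2)}")
```

Above `lam_max` every coefficient is zero; below it, variables enter the model at different penalty values, which is the same qualitative behavior the W0 path plot shows for the network.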

Model Building and Validation

We used grid search and cross-validation to find the optimal lasso penalty, along with other hyperparameters, for the multi-output lasso neural network. With this fitted model, we could simply have dropped the variables whose W0 weights were zeroed out and considered the variable selection task finished.
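
A generic sketch of k-fold grid search. `fit` and `score` are hypothetical callables; in our setting `fit` would train the lasso network for one hyperparameter combination. The toy model below just shrinks the training mean toward zero so the example runs quickly.

```python
import itertools
import numpy as np

def kfold_indices(n, k, seed=0):
    """Shuffle row indices once and split them into k roughly equal folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n), k)

def grid_search_cv(fit, score, X, Y, grid, k=5, seed=0):
    """Return the hyperparameter combination with the best mean validation score."""
    folds = kfold_indices(len(X), k, seed)
    names = sorted(grid)
    best_params, best_score = None, -np.inf
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        fold_scores = []
        for i in range(k):
            val = folds[i]
            train = np.concatenate([folds[j] for j in range(k) if j != i])
            model = fit(X[train], Y[train], **params)
            fold_scores.append(score(model, X[val], Y[val]))
        mean_score = float(np.mean(fold_scores))
        if mean_score > best_score:            # higher score is better
            best_params, best_score = params, mean_score
    return best_params, best_score

# Toy stand-ins so the sketch runs: the "model" is the training mean shrunk by 1 / (1 + lam).
def fit_shrunk_mean(X, Y, lam):
    return Y.mean(axis=0) / (1.0 + lam)

def neg_mse(model, X, Y):
    return -float(np.mean((Y - model) ** 2))

rng = np.random.default_rng(1)
X = rng.standard_normal((100, 3))
Y = 5.0 + 0.1 * rng.standard_normal((100, 2))
best_params, best_score = grid_search_cv(fit_shrunk_mean, neg_mse, X, Y, {"lam": [0.0, 1.0, 10.0]})
print(best_params, round(best_score, 4))
```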

However, as an added sanity check, we used the fitted W0 weights as a variable importance ranking and trained more traditional classifiers for each response variable on the top 100, 200, 300, … , and all explanatory variables. If the W0 weights reflect true variable importance, we would expect each additional block of variables to add less AUC than the one before, until AUC eventually reaches a “plateau”.¹
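
A sketch of the two pieces this check needs: nested top-k subsets ranked by |W0|, and AUC computed as the probability that a randomly chosen positive example outscores a randomly chosen negative one (ties count one half). The random `w0` is a hypothetical stand-in for fitted weights, and the classifier itself is left out.

```python
import numpy as np

def auc(y_true, score):
    """ROC AUC via pairwise comparison of positive and negative scores (ties count 1/2)."""
    y_true = np.asarray(y_true, dtype=bool)
    score = np.asarray(score, dtype=float)
    pos, neg = score[y_true], score[~y_true]
    wins = (pos[:, None] > neg[None, :]).sum() + 0.5 * (pos[:, None] == neg[None, :]).sum()
    return wins / (len(pos) * len(neg))

def nested_subsets(w0, step=100):
    """Column indices of the top 100, 200, ... variables by |w0|, ending with all variables."""
    order = np.argsort(-np.abs(w0), kind="stable")
    sizes = list(range(step, len(w0), step)) + [len(w0)]
    return {k: order[:k] for k in sizes}

rng = np.random.default_rng(0)
w0 = rng.standard_normal(300)                     # stand-in for fitted W0 weights
subsets = nested_subsets(w0)
print({k: len(cols) for k, cols in subsets.items()})  # {100: 100, 200: 200, 300: 300}
# For each response variable, one would fit a classifier on X[:, subsets[k]]
# and compute auc(y_val, predicted_scores) for each k.
```

The pairwise form is quadratic in the number of examples, so for large validation sets a rank-based formula is preferable; it is used here only because it mirrors the definition directly.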

Above is a plot of AUC by number of explanatory variables (up to 300) for several response variables.

AUCs are fairly consistent for most response variables regardless of the number of explanatory variables, suggesting that the variable selection methodology picks out the most important variables and that model performance generally plateaus with a relatively small number of explanatory variables. It’s worth noting that for some response variables, AUC increases as the number of explanatory variables decreases, indicating that adding ‘noise’ variables to a model can reduce its performance. To aggregate results, we calculated the percent change in AUC between each reduced subset of explanatory variables and the full set.
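
A sketch of that aggregation, assuming the percent change is measured relative to the full-variable model's AUC and then averaged across response variables. The AUC values below are made up for illustration.

```python
import numpy as np

def pct_change_auc(auc_subset, auc_full):
    """Percent change in AUC of a reduced-subset model relative to the full-variable model."""
    auc_subset = np.asarray(auc_subset, dtype=float)
    auc_full = np.asarray(auc_full, dtype=float)
    return 100.0 * (auc_subset - auc_full) / auc_full

# Hypothetical AUCs for three response variables
auc_full = [0.80, 0.72, 0.65]
auc_top_k = [0.79, 0.72, 0.66]
changes = pct_change_auc(auc_top_k, auc_full)
print(np.round(changes, 2), round(float(changes.mean()), 2))
```

A mean near zero can hide gains on some responses and losses on others, so the per-response values are worth inspecting alongside the average.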

Results and Conclusions

The results of this technique were surprising. We found that we can remove approximately half of our explanatory variables with no drop in AUC, as measured by the average percent change in AUC across response variables. Interestingly, almost all of the predictive ability of our dataset was in the top 10% of variables. However, as we reduced the number of variables in the models, AUC did not fall at the same rate for every response variable, suggesting that the selection was favoring some response variables over others.

While our analysis suggests we could remove up to half of our current number of variables, we started by only removing 10%. The reasons for this conservative reduction are twofold.

We were cautious about removing variables because radically changing the dataset that feeds a large number of our products should not be taken lightly. In addition, it will take time for people to get used to the concept of ‘less is more’, especially when weighing data quality against data size, so we started small with the intention of removing more variables later.

Reducing the size of our data by 10% might not seem like a lot, but since we run hundreds of models a week, it adds up. This saves not only time but also computing resources, which helps our bottom line one model at a time.